Hosting the Blog for Free using GCP and CockroachDB

Jan 26, 2025

Welcome back, and Happy New Year! I planned to publish this post weeks ago, but during the holidays, I finally managed to avoid opening my laptop. In the previous posts, I described creating a back-end using Directus and a front-end using Angular. But it still runs in the development environment, so now let's look at how to host it - for free.

My Background Story

A few years ago, I met a friend who proudly told me his business had a new website. I quickly opened it; it looked good, so I was interested in the details: who created it, what framework it uses, what the costs are, etc. The site was implemented by an individual without any coding knowledge using WordPress. She was also the site designer. The project was cheap, and what excited me the most was that there were no hosting fees.

To gain more insight, I asked another developer, who I knew was building websites with WordPress, about his hosting costs. He had a small server where the sites were running. It was cheap, so he didn't charge his customers for hosting, but the server could only handle around 10 sites, and if any of them had high usage, the others might stop working. Not to mention that the database and other infrastructure need to be updated manually from time to time.

So I started thinking. If I got a request to create a site for a small business, how could I compete with this offer? It was cheap, implemented quickly, hosted for free, and even if it contains bugs, it works. I could argue that the website I implement would be more professional, using cloud technologies, database backups, and test automation, so there would be fewer issues. The answer would probably still be "ok, but it is expensive and the cheaper solution also works, so that's fine for me".

To reduce the implementation costs, I needed a CMS: that's Directus. But how can I host a combination of Directus and Angular, preferably for free or at least at minimal cost, without giving up on cloud technologies? Let's see.

Hosting In the Cloud

As a starting point, I had one requirement that I wished to keep: use a PaaS-based cloud hosting option. If I need to manage multiple small projects, I don't want to be responsible for keeping the infrastructure up-to-date. And the same applies to the database. Instead of hosting a server and maintaining one or multiple self-hosted databases running there, I would like to use database-as-a-service. It is quite a restriction when selecting between providers, but a flexible, cloud-based, scalable deployment option will be beneficial in the long run.

Hosting the Database

I thought selecting a database hosting option would be easy, but I spent a considerable amount of time checking the pricing plans of different providers. Finding a free server where I could host my database would be possible, but as I said, I wanted to avoid it.

Directus supports multiple database clients: PostgreSQL, MySQL, Oracle Database, MS SQL, SQLite, and CockroachDB.

SQLite Cloud supports SQLite, offering high concurrency, strong consistency, dynamic scalability, and multiple regions, but it's free for only one project with 1 GB of storage and limited resources. Besides that, SQLite is not a recommended option for production sites.

PostgreSQL and MySQL have similar options. Render.com seemed promising at first, so I gave it a try. But what I didn't notice in the pricing description is that free instances are only available for a month. After that, you need to upgrade to a paid plan that costs at least 19 USD.

Heroku also provides Heroku Postgres but it offers only 1 GB storage for 5 USD monthly. Not bad, but limited and not free.

Finally, there is Neon. It has a free plan offering 0.5 GB storage for 10 projects. It might be enough for one Directus instance, but two small ones might run out of space. The next plan is 19 USD, which is not cheap. There are many more options for hosting these clients, but I couldn't find a suitable one, as there is always a strict resource or time limit.

I didn't put any effort into MS SQL or Oracle Database clients, as I didn't expect any suitable options there, but CockroachDB caught my attention. It offers 50 million Request Units and 10 GB of storage for free per month. This amount is shared between clusters, but if you create a dedicated account for each customer and create the production database there, it should be more than enough.

Hosting the Site

There are many options for hosting your website. I won't list all the options I considered but will rather focus on the outcome, which is Google Cloud Platform (GCP).

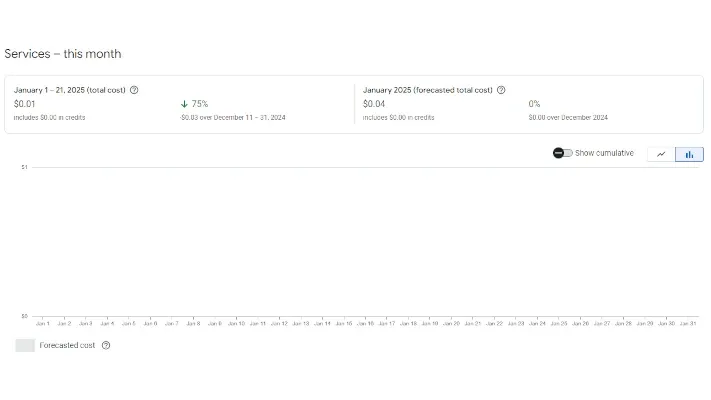

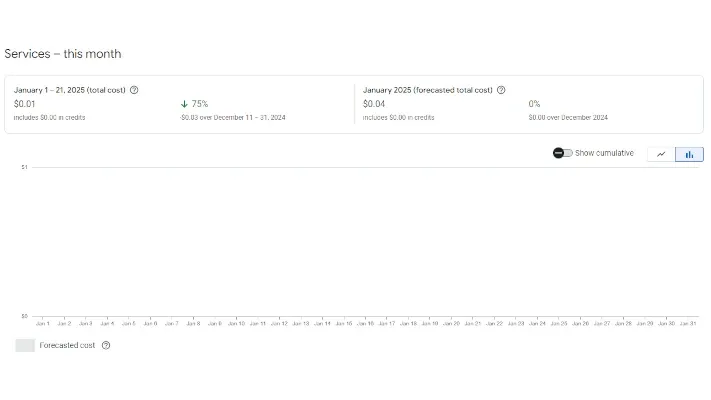

In my experience, Directus does not start in milliseconds, so we need an option that keeps it alive. App Engine has a generous free tier for the standard environment, offering 28 instance hours per day (for F1 instances) with 1 GB of outbound data transfer. For a small website, a single instance should be enough, so we have a place to host Directus. Whenever additional resources are required, we can update the configuration, and we still pay only for the resources used.

But we still need to run the front-end and Directus will consume 24 out of the free 28 hours. That's why I used Cloud Run for this. It also offers free tier pricing including:

- CPU: First 240,000 vCPU-seconds free per month

- Memory: First 450,000 GiB-seconds free per month

A small website will probably stay within these limits but even if you run out of this quota, you only pay for the extra resources used.
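To make the free-tier arithmetic concrete, here is a small sketch. The quota numbers are the ones quoted above; the service size (1 vCPU, 0.5 GiB of memory) and the function names are assumptions for illustration only, and actual billing depends on how your services are configured.

```typescript
// Free-tier figures quoted in this post (check the current GCP pricing pages).
const APP_ENGINE_FREE_F1_HOURS_PER_DAY = 28;
const CLOUD_RUN_FREE_VCPU_SECONDS = 240000;
const CLOUD_RUN_FREE_GIB_SECONDS = 450000;

// Free App Engine instance hours left per day with N always-on F1 instances.
function remainingAppEngineHours(alwaysOnInstances: number): number {
  return APP_ENGINE_FREE_F1_HOURS_PER_DAY - alwaysOnInstances * 24;
}

// Billable seconds per month covered by the Cloud Run free tier for a
// service of the given size: whichever quota runs out first is the limit.
function freeCloudRunSeconds(vcpus: number, memoryGib: number): number {
  return Math.min(
    CLOUD_RUN_FREE_VCPU_SECONDS / vcpus,
    CLOUD_RUN_FREE_GIB_SECONDS / memoryGib
  );
}

console.log(remainingAppEngineHours(1)); // 4
console.log((freeCloudRunSeconds(1, 0.5) / 3600).toFixed(1)); // 66.7 (hours)
```

With these assumed settings, the CPU quota is the limiting factor: roughly 66 hours of billable time per month for the front-end, which is why a service that only consumes resources while handling requests fits better here than an always-on instance.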

Now we have the technology to run the website. But how can we deploy the application? Let's take a look at it.

Continuous Deployment

Following the GCP guides, deploying Directus and Angular is quite straightforward. App Engine's standard environment doesn't allow custom Docker images, so what we need is an app.yaml file that defines the environment and a cloudbuild.yaml file that defines the build configuration for Cloud Build.

But there is an important question. As Directus stores nearly all of its important data in the database, how can we migrate the collections and settings from development to production? The first official option I found is the Directus CLI. Unfortunately, it only migrates the collections, without any settings, roles, or permissions. The next option is the directus-sync CLI, which provides configuration options to define which collections to migrate. As I need to change the permissions of the public role, I have to use this option.

This was the first time I faced the drawbacks of CockroachDB: it is not the primary database client of Directus. I started development using SQLite locally and tried to migrate to a production CockroachDB database. It failed... many times. For example, the ID of certain collections was created with type string instead of UUID. SQLite didn't complain, but CockroachDB did. So I had to create at least the base of the collections to kick off the migration. Since then, I have used a CockroachDB instance for local development as well. There are still issues during migration, but at least fewer. So my advice is: do not use CockroachDB if you can afford about 5 USD a month. For that price, you can use a Heroku Postgres instance, which will be much easier to maintain in the long run.
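One way to catch the string-versus-UUID problem before a migration might be a small check over the exported schema. The sketch below is not part of Directus or directus-sync: the `FieldInfo` shape is a simplified, hypothetical stand-in for whatever your schema export contains, and the collection names are made up.

```typescript
// Simplified, hypothetical shape of a field entry in a schema export.
interface FieldInfo {
  collection: string;
  field: string;
  type: string; // e.g. "uuid", "string", "integer"
  isPrimaryKey: boolean;
}

// Return "collection.field" for every primary key declared as a plain string.
function findStringPrimaryKeys(fields: FieldInfo[]): string[] {
  return fields
    .filter((f) => f.isPrimaryKey && f.type === "string")
    .map((f) => f.collection + "." + f.field);
}

// Made-up sample data for illustration.
const sample: FieldInfo[] = [
  { collection: "posts", field: "id", type: "uuid", isPrimaryKey: true },
  { collection: "tags", field: "id", type: "string", isPrimaryKey: true },
  { collection: "tags", field: "name", type: "string", isPrimaryKey: false },
];

console.log(findStringPrimaryKeys(sample)); // [ 'tags.id' ]
```

Running something like this against the development schema would at least list the collections that need attention before the first push to CockroachDB.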

The app.yaml for the Directus service looks like this:

    runtime: "nodejs18"
    instance_class: "F1"
    automatic_scaling:
      min_instances: 1
      max_instances: 1
    env_variables:
      your_directus_variables: here

The deployment with the Node runtime uses the scripts in package.json to build and start the application. Here I used a dynamic script to process all extensions, but you can hard-code it if you have just one.

    "scripts": {
      "postinstall": "for d in ./extensions/*; do (cd \"$d\" && npm ci); done",
      "build": "npm run build:extensions",
      "build:extensions": "for d in ./extensions/*; do (cd \"$d\" && npm run build); done",
      "start": "npx directus start"
    }

The last step is to enable continuous deployment using Cloud Build. It can be configured using a cloudbuild.yaml file. It will start the deployment using App Engine and use directus-sync to update the database.

    steps:
      - name: 'gcr.io/google.com/cloudsdktool/cloud-sdk'
        entrypoint: 'bash'
        args: ['-c', 'gcloud config set app/cloud_build_timeout 900 && gcloud app deploy']
      - name: 'node:18'
        entrypoint: 'npx'
        args: ['directus-sync', 'push', '-u', '$_DIRECTUS_URL', '-t', '$_DIRECTUS_TOKEN']
    options:
      substitutionOption: ALLOW_LOOSE
      logging: CLOUD_LOGGING_ONLY
    timeout: '900s'

In contrast, Cloud Run supports Docker, so we can create a custom image using a Dockerfile for the front end.

    FROM node:18-alpine AS build
    WORKDIR /app

    COPY package.json package-lock.json ./
    RUN npm ci

    COPY . .
    RUN npm run build

    CMD ["npm", "run", "serve:ssr:blog"]
    EXPOSE 4000

After creating this Dockerfile we can create a new service in Cloud Run. During the steps, we can select deployment from GitHub, which will automatically create a Cloud Build trigger.
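One detail worth keeping in mind: Cloud Run tells the container which port to listen on through the `PORT` environment variable (8080 unless the service is configured otherwise), and the `EXPOSE` line in the Dockerfile does not change that. The server generated for Angular SSR typically reads `PORT` and falls back to 4000. The helper below is a hypothetical sketch of that logic, not code taken from this project.

```typescript
// Hypothetical helper: pick the port from the environment, falling back to
// 4000 (the port exposed in the Dockerfile) when PORT is missing or invalid.
function resolvePort(
  env: Record<string, string | undefined>,
  fallback: number = 4000
): number {
  const raw = env["PORT"];
  const parsed = raw === undefined ? NaN : parseInt(raw, 10);
  return !isNaN(parsed) && parsed > 0 && parsed < 65536 ? parsed : fallback;
}

console.log(resolvePort({ PORT: "8080" })); // 8080
console.log(resolvePort({})); // 4000
```

In a Node server this would be called as `resolvePort(process.env)`. Alternatively, the container port of the Cloud Run service can be set to 4000 in the service settings.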

As a final step, to avoid costs, we need to set up cleanup policies in Artifact Registry. Both builds push images there, and storage beyond the free 0.5 GB is billed to the project. So I usually create two policies: one to discard old builds, and another to keep one or two of the latest versions.
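The two policies could be generated as a JSON file along these lines. The field names follow my understanding of the Artifact Registry cleanup policy file format and should be verified against the official documentation; the policy names, the 30-day threshold, and the keep count are assumptions for illustration.

```typescript
// Assumed shape of an entry in an Artifact Registry cleanup policy file.
interface CleanupPolicy {
  name: string;
  action: { type: "Delete" | "Keep" };
  condition?: { tagState: "any" | "tagged" | "untagged"; olderThan: string };
  mostRecentVersions?: { keepCount: number };
}

// Build one policy that deletes old builds and one that keeps the latest ones.
function buildCleanupPolicies(olderThanDays: number, keepCount: number): CleanupPolicy[] {
  return [
    {
      name: "delete-old-builds", // hypothetical policy name
      action: { type: "Delete" },
      condition: { tagState: "any", olderThan: olderThanDays + "d" },
    },
    {
      name: "keep-latest-builds", // hypothetical policy name
      action: { type: "Keep" },
      mostRecentVersions: { keepCount: keepCount },
    },
  ];
}

// Print the policy file content; redirect it to a file to use it.
console.log(JSON.stringify(buildCleanupPolicies(30, 2), null, 2));
```

The resulting file can then be applied to a repository with `gcloud artifacts repositories set-cleanup-policies`, or the same two rules can be entered by hand in the console. As far as I know, when an image matches both a keep and a delete policy, the keep policy wins, which is what makes this pair safe.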

Conclusion

And that's all. Our application is running using CockroachDB, App Engine, Cloud Run, and Cloud Build. Of course, this description includes only the basics, but I hope it helps you get started with similar projects. For example, you can also use Cloud Storage, which comes with a free tier as well, to store images uploaded using Directus.

Coming Next

I have been waiting for a long time, but finally, I can start implementing user registration so you can like the posts. So what's coming next is:

- Enabling user registration

- Adding a "like" feature

- Allowing comments

- Implementing a favorites system

- Managing post lifecycles through workflows

- Generating post summaries with AI

- Sending email notifications when a new post is created